Vibe-Coding: The promise and the limits

We’re very bullish on vibe-coding. But backend coupling, token burn, and compounding hallucination errors are real. Here are 5 problems we keep hitting in production.

I’m Ivan, co-founder/CEO of Prompt.Build. Before this, we were BeyondRisk AI-6 building enterprise AI systems. We adopted vibe-coding tools early to move faster. I’m very bullish - and I think we’re still at the very beginning of what’s possible. 🚀 This post documents the pains we keep hitting when real teams try to ship production apps with vibe-coding. No fixes in this post; I’ll share how we attempt to tackle them in my next post.

Also available in: 日本語 | PT-BR

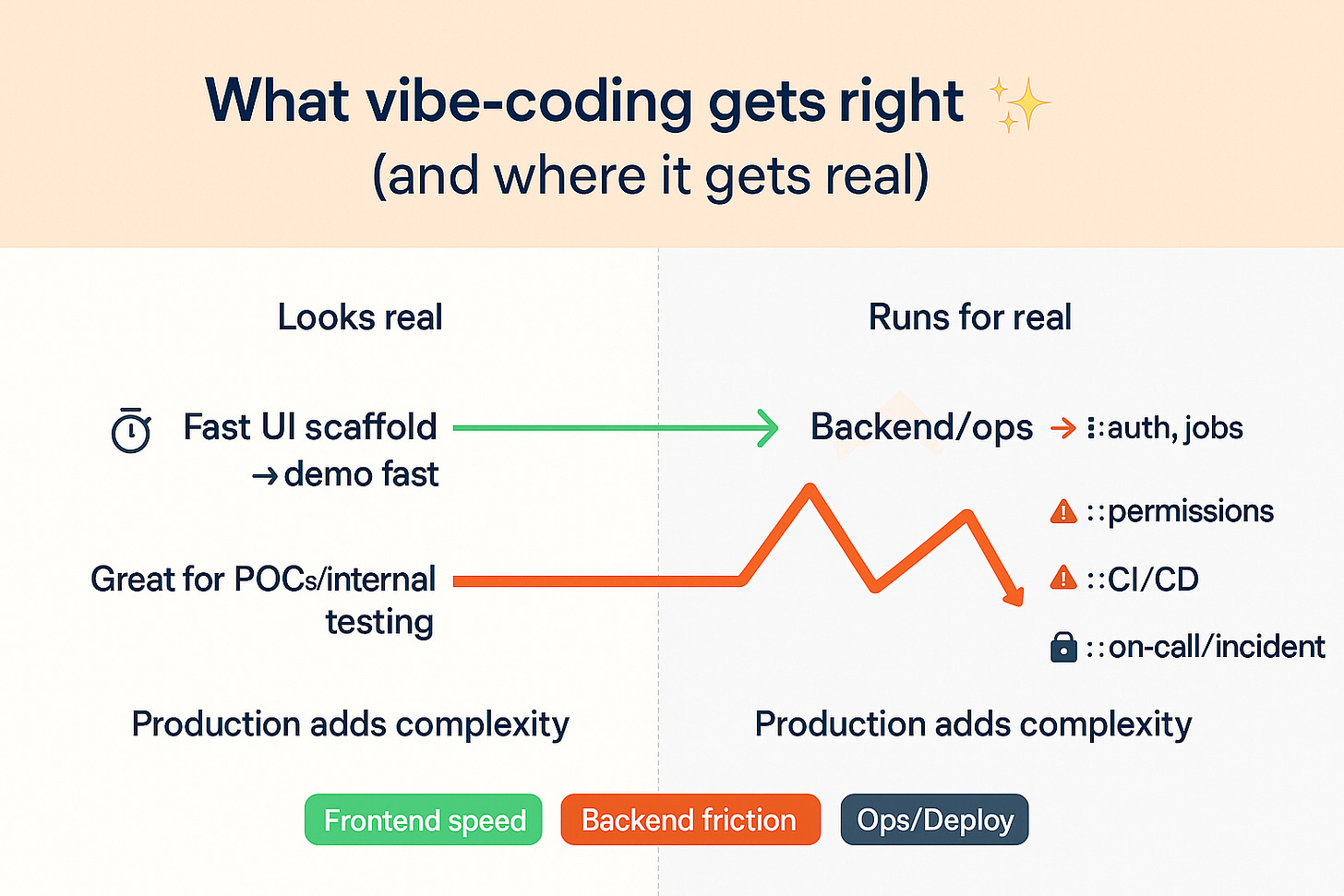

What vibe-coding gets right (why we’re bullish) ✨

Vibe-coding - telling an AI what you want and letting it build - makes frontends fast. You can go from nothing to a real-looking app in one session: pages show up, navigation works, forms and tables appear, and the design looks consistent without long debates.

It also shrinks the path from idea → screen. Instead of tickets, wireframes, and handoffs, you describe what you need and see it right away. That quick loop makes you try more: test two layouts, swap components, tweak the copy before you spend days of engineering time.

And it’s no longer frontend-only. Most modern vibe-coding platforms wire in a ready backend - often via Supabase (managed or OSS) - so you can get auth, Postgres, row-level security, storage, and a clean SDK up on day one. That means demos and internal tools can feel “real” end-to-end, not just clickable shells.

✅ Bottom line: vibe-coding shines at speed, iteration, and shared understanding - and increasingly, at getting you to a functioning full-stack prototype demo quickly.

But once you leave the UI layer, the vibe changes.

The 5 problems we keep hitting in production ⚠️

1) Vibe-coding speed vs backend gaps 🔧

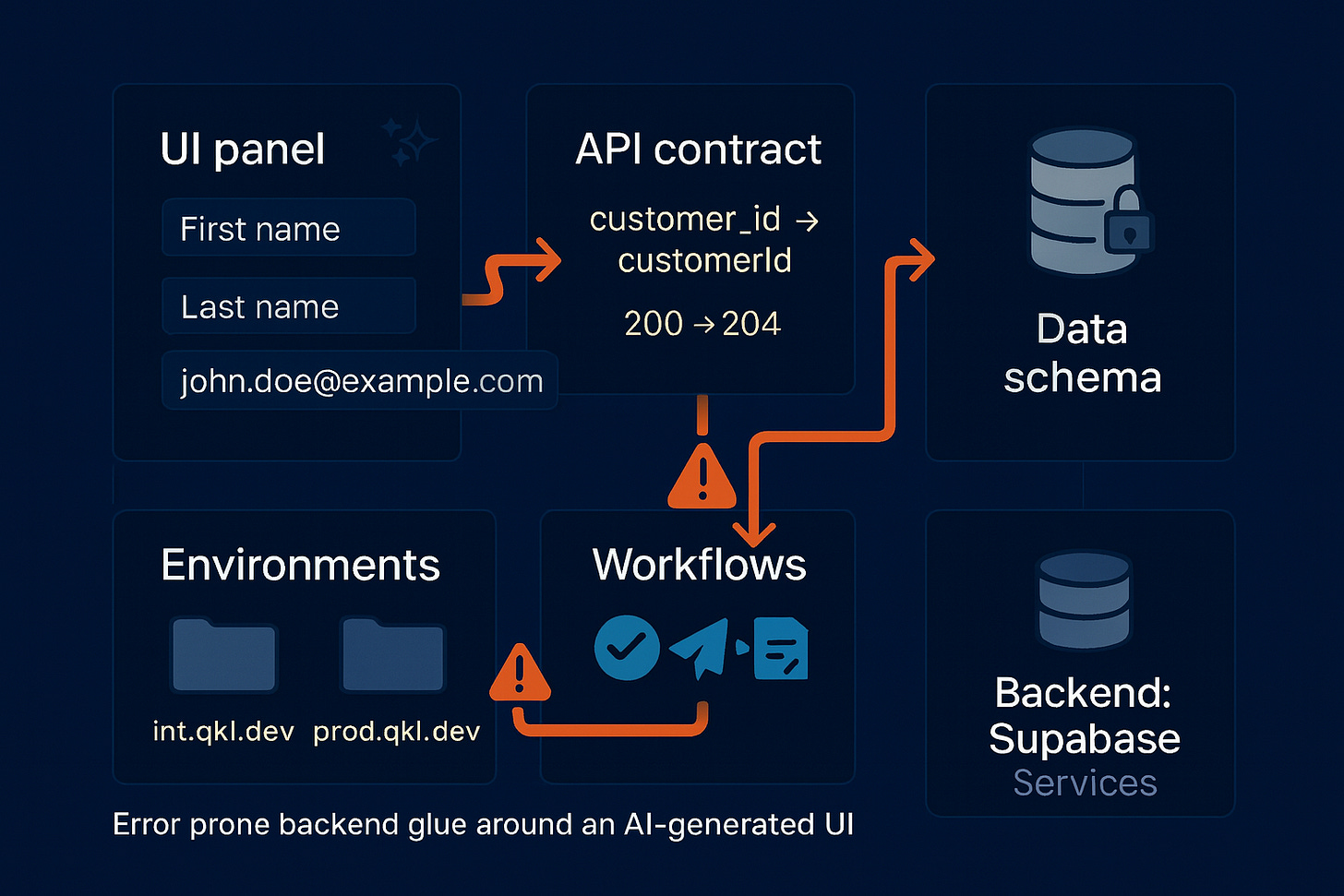

It’s true: most vibe-coding platforms now ship with a ready backend (often Supabase - auth, Postgres, RLS, storage, functions). So the issue isn’t “no backend.” It’s the wiring between the fast-changing UI and the backend contracts once real logic appears.

Where we keep seeing production pain:

🔍 Silent drift (UI vs API).

Tiny differences blow things up later: customer_id vs customerId, UI expects 200 but the API returns 204, or a list changes from { data: [] } to just []. A quick backend tweak can make the screen read the wrong fields (id vs user_id) without an obvious error.

🧩 Screens fall out of sync.

You fix “Edit Customer,” but “Orders” still uses the old data shape. Without one source of truth, each screen drifts a bit in its own way.

🗄️ Schema changes don’t land everywhere.

Rename a column or tighten an enum for one place, and other pages/functions keep the old version - unless migrations ship together and the pipeline catches it. Supabase’s own guidance pushes migrations + CI to avoid exactly this class of errors.

🌗 Staging vs prod differences.

Keys, base URLs, storage buckets, or policy switches aren’t the same. Preview works; prod throws 401/403/404. Classic “it worked on my branch” fire drill.

🔌 Workflow edges are brittle (jobs, webhooks, queues).

The UI can say “Approve → Notify → Reconcile,” but wiring serverless functions, cron jobs, and webhook signatures (Stripe, Slack, GitHub) is brittle. Rate limits, retries, and idempotency aren’t handled by a prompt.
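To make the webhook point concrete, here is a minimal sketch of the two things a prompt rarely wires up by itself: signature verification and idempotent handling of retries. It assumes a generic HMAC-SHA256 signature over the raw body, which is not the exact scheme of Stripe, Slack, or GitHub. The class name, secret, and event IDs are hypothetical, and the in-memory set stands in for a durable store.

```python
import hashlib
import hmac


def sign(secret: bytes, payload: bytes) -> str:
    """Hex HMAC-SHA256 of the raw body (generic scheme, not any vendor's exact format)."""
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


class WebhookHandler:
    """Verifies the signature, then processes each event ID at most once."""

    def __init__(self, secret: bytes):
        self.secret = secret
        # Assumption: in production this would be a durable store with a
        # unique constraint, not process memory.
        self.seen_event_ids = set()
        self.processed = []

    def handle(self, event_id: str, payload: bytes, signature: str) -> str:
        expected = sign(self.secret, payload)
        # compare_digest avoids leaking information through timing differences.
        if not hmac.compare_digest(expected, signature):
            return "rejected"
        if event_id in self.seen_event_ids:
            # A retry of an event we already handled: acknowledge, do nothing.
            return "duplicate"
        self.seen_event_ids.add(event_id)
        self.processed.append(event_id)
        return "processed"


handler = WebhookHandler(secret=b"hypothetical-secret")
body = b'{"type": "invoice.approved", "id": "evt_1"}'
good_sig = sign(b"hypothetical-secret", body)

first = handler.handle("evt_1", body, good_sig)   # new event, valid signature
retry = handler.handle("evt_1", body, good_sig)   # provider retried the delivery
forged = handler.handle("evt_2", body, "not-a-real-signature")
print(first, retry, forged)
```

The design choice worth noting is that a duplicate delivery is acknowledged rather than treated as an error, so the sender stops retrying without the side effect running twice.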

We typically see these issues surface once a project has 3-5 external integrations (payments, comms, identity, storage, analytics). That’s enough to trigger drift.
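For the staging-vs-prod differences mentioned above, one cheap guard is a parity check that runs before deploy. The sketch below assumes each environment's settings can be loaded into a plain dictionary. The key names and values are hypothetical placeholders, not settings any particular platform requires.

```python
# Hypothetical key names; substitute whatever your app actually reads.
REQUIRED_KEYS = {"API_BASE_URL", "AUTH_PUBLIC_KEY", "STORAGE_BUCKET", "WEBHOOK_SECRET"}


def config_problems(env_name, config):
    """Return human-readable problems for one environment's config."""
    problems = []
    for key in sorted(REQUIRED_KEYS):
        # Treat both an absent key and an empty value as a problem.
        if not config.get(key):
            problems.append(f"{env_name}: {key} is missing or empty")
    return problems


staging = {
    "API_BASE_URL": "https://staging.example.test",
    "AUTH_PUBLIC_KEY": "staging-key",
    "STORAGE_BUCKET": "uploads-staging",
    "WEBHOOK_SECRET": "staging-secret",
}
prod = {
    "API_BASE_URL": "https://app.example.test",
    "AUTH_PUBLIC_KEY": "prod-key",
    "STORAGE_BUCKET": "",  # set in staging, left empty in prod
    # WEBHOOK_SECRET was never added to prod
}

staging_problems = config_problems("staging", staging)
prod_problems = config_problems("prod", prod)
for line in prod_problems:
    print(line)
```

Failing the pipeline when the list is non-empty turns a 401/403/404 in prod into a failed check before release.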

✅ Bottom line: shipping a backend isn’t the blocker anymore; coordinating UI ↔ API ↔ data contracts is. The faster the UI iterates, the easier it is for endpoints, schemas, and policies to fall out of sync - especially as integrations grow beyond CRUD (payments, audit logs, third-party APIs, background jobs).
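The silent-drift cases in this section (customer_id vs customerId, a dropped { data: [] } envelope, a changed status code) can be caught mechanically if the UI's expectations are written down once. What follows is a minimal sketch under that assumption, not the approach Prompt.Build uses. The Contract class and check_response function are hypothetical names invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Contract:
    """What the UI expects from one endpoint (hypothetical shape)."""
    status: int
    envelope_key: Optional[str]  # "data" for {"data": [...]}, None for a bare list
    item_fields: frozenset


def check_response(contract, status, body):
    """Compare an actual response with the contract; return a list of problems."""
    problems = []
    if status != contract.status:
        problems.append(f"status: expected {contract.status}, got {status}")

    if contract.envelope_key is None:
        items = body
    elif isinstance(body, dict) and contract.envelope_key in body:
        items = body[contract.envelope_key]
    else:
        problems.append(f"envelope: expected key '{contract.envelope_key}'")
        items = body

    if not isinstance(items, list):
        problems.append("items: expected a list")
        return problems

    for i, item in enumerate(items):
        if not isinstance(item, dict):
            problems.append(f"item {i}: expected an object")
            continue
        missing = contract.item_fields - set(item)
        extra = set(item) - contract.item_fields
        if missing:
            problems.append(f"item {i}: missing {sorted(missing)}")
        if extra:
            problems.append(f"item {i}: unexpected {sorted(extra)}")
    return problems


orders = Contract(status=200, envelope_key="data",
                  item_fields=frozenset({"id", "customer_id"}))

ok = check_response(orders, 200, {"data": [{"id": 1, "customer_id": 7}]})
# The backend was "tweaked": bare list, camelCase field name.
drifted = check_response(orders, 200, [{"id": 1, "customerId": 7}])
for line in drifted:
    print(line)
```

Run against every endpoint in CI, a check like this makes drift loud at build time instead of silent at runtime.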

2) Supabase coupling 🧩 and why “export & continue” still isn’t code you truly own

Most modern vibe-coding platforms wire into Supabase (managed or OSS) for a day-one backend: Postgres + Auth + RLS + Storage + Edge/Functions + Realtime. That’s a huge win - login, tables, policies, and files in hours, not weeks, and demos feel real end-to-end.

Our view, though:

True vibe-coding should generate code you own - front-end and back-end.

When the backend lives inside a provider, you don’t fully “own” the primitives; you bind to how that provider implements them (auth/session shape, RLS policy style, SQL extensions, storage paths & ACLs, Realtime channels, client SDK semantics).

So even if you export from a platform (Lovable/Bolt/Replit) and continue in Claude Code/Cursor/Codex or another assistant, you’re typically exporting front-end code around a provider-shaped backend. That’s not bad - it’s the natural cost of moving fast on a great stack - but it’s attachment, not independence.

Where this shows up:

Portability work later. Moving to vanilla Postgres/RDS/Aurora or a different backend means remapping policies, auth/session handling, storage semantics, and SDK calls.

Ops/observability stay provider-shaped. Incidents, logs, metrics, quotas, and runbooks follow the provider’s patterns; swapping means retraining and re-tooling.

Customization ceiling. You can’t always modify the underlying auth/queue/storage internals the way you would if they were generated into your repo/infra.

We’re not anti-Supabase - we’re pro-ownership. It’s an excellent fast-start backend. We just call the coupling early so teams can plan portability and exit paths - or decide to generate/own more of the backend code when it matters.

Practical take: use Supabase confidently for speed, and keep escape hatches:

Treat auth and data as interfaces (thin auth boundary + data-access layer).

Wrap storage/webhooks/queues behind your functions so URLs/signatures/providers can change.

If ownership is critical, target a path where your vibe-coding flow can emit the backend primitives as code/infrastructure you can edit, review, and redeploy anywhere.
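As an illustration of "treat auth and data as interfaces," here is a minimal sketch of a data-access boundary. Application code depends only on a small protocol. An in-memory implementation is shown because it runs anywhere, and a provider-backed class would implement the same two methods (deliberately not shown, to avoid guessing at SDK calls). All names here are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass
class Customer:
    id: str
    email: str


class CustomerRepo(Protocol):
    """The only surface the rest of the app is allowed to touch."""

    def get(self, customer_id: str) -> Optional[Customer]: ...

    def save(self, customer: Customer) -> None: ...


class InMemoryCustomerRepo:
    """Stand-in backend; a provider adapter would expose the same methods."""

    def __init__(self):
        self._rows = {}

    def get(self, customer_id: str) -> Optional[Customer]:
        return self._rows.get(customer_id)

    def save(self, customer: Customer) -> None:
        self._rows[customer.id] = customer


def change_email(repo: CustomerRepo, customer_id: str, new_email: str) -> bool:
    """Business logic written against the interface, not a provider SDK."""
    customer = repo.get(customer_id)
    if customer is None:
        return False
    repo.save(Customer(id=customer.id, email=new_email))
    return True


repo = InMemoryCustomerRepo()
repo.save(Customer(id="c1", email="old@example.test"))
updated = change_email(repo, "c1", "new@example.test")
missing = change_email(repo, "does-not-exist", "x@example.test")
print(updated, missing, repo.get("c1").email)
```

With this boundary in place, moving backends means writing one new adapter class rather than hunting for provider calls across every screen.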

3) Token-burn during the debugging loop 🔥💸

Even with newer cost controls like prompt caching from OpenAI and Anthropic, token spend during iterative debugging is still hard to predict. Caching discounts repeat context, but real-world loops still include retries, tool calls, and non-local edits that re-send large context - so two “tiny fixes” can differ by 10–50× in tokens depending on what the model decides to touch and how often context is reloaded.

🔥 Why the burn persists today:

Non-deterministic edits. A small prompt often triggers broader rewrites across adjacent files or types, forcing larger contexts to be re-processed on the next pass. (Vendors mitigate with caching/credits, but they don’t eliminate wide diffs.)

Retry/tool thrash. Rate limits, schema mismatches, or tool re-plans re-stream the same (or larger) context. Caching helps but doesn’t cover every path or cache miss.

Pricing signals reflect the variance. Platforms are shifting to usage/effort-based or token-tier pricing precisely because workload can swing widely between “one and done” and “multiple exploratory passes.” Users frequently report blowing through token or credit pools faster than expected.
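A back-of-the-envelope model shows how two "tiny fixes" can land far apart. The sketch below assumes the full context is re-sent on every pass and that cached input tokens are billed at a discount. All token counts, the cache hit rate, and the discount are hypothetical numbers chosen for illustration, not any vendor's pricing.

```python
def tokens_billed(context_tokens, output_tokens, passes,
                  cache_hit_rate=0.0, cached_discount=0.0):
    """Billed-token equivalent for a loop that re-sends context on every pass."""
    cached = context_tokens * cache_hit_rate
    uncached = context_tokens - cached
    # Cached tokens still cost something, just less.
    input_per_pass = uncached + cached * (1 - cached_discount)
    return passes * (input_per_pass + output_tokens)


# Fix A: the model touches one file and is done in a single pass.
narrow_fix = tokens_billed(context_tokens=8_000, output_tokens=500, passes=1)

# Fix B: same-looking request, but the model rewrites adjacent files,
# so more context is loaded and it takes six passes to settle.
wide_fix = tokens_billed(context_tokens=40_000, output_tokens=2_000, passes=6)

# Fix B again, with half the context served from cache at a 90% discount.
wide_fix_cached = tokens_billed(context_tokens=40_000, output_tokens=2_000,
                                passes=6, cache_hit_rate=0.5, cached_discount=0.9)

ratio = wide_fix / narrow_fix
ratio_cached = wide_fix_cached / narrow_fix
print(f"uncached: {ratio:.1f}x, with caching: {ratio_cached:.1f}x")
```

Under these assumptions the wide fix costs roughly 30 times the narrow one, and caching narrows the gap to about 17 times without closing it.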

🤷‍♂️ Where the uncertainty bites:

You can’t reliably forecast cost per fix - the same-looking change can fan out unpredictably.

You pay just to confirm “nothing else changed,” since verification itself re-consumes context.

Teams struggle to budget the build phase because spend tracks the number and breadth of regeneration rounds, not just feature count.

✅ Bottom line: the pain isn’t only expense - it’s lack of transparency and predictability. Even with caching discounts, developers lack a stable unit of work to estimate “how much will this fix cost?” during active iteration.

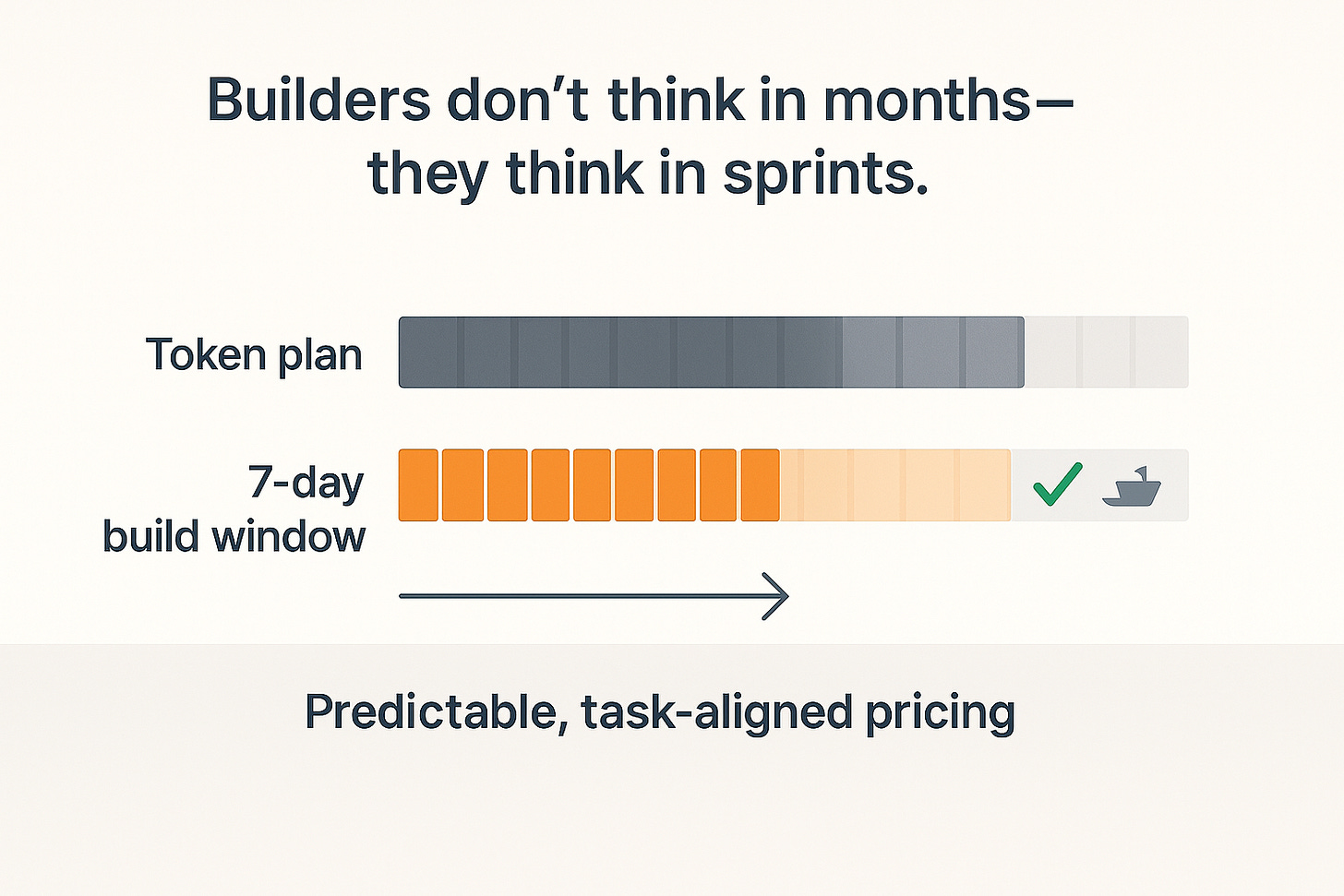

4) Monthly subscription plans don’t match how we build 🗓️⏱️

Most platforms sell monthly plans with token limits. Most teams don’t build all month. They sign up, build hard for 5–10 days, ship a demo, export to an AI code assistant (Cursor, Claude Code, etc.), and stop. Users don’t want a calendar - they want a predictable way to pay for a chunk of work.

🧠 Why this hurts users:

You pay for idle time. After the push week, the rest of the month sits unused.

Budgeting is messy. A small fix can eat more tokens than expected; cost per task isn’t clear.

Wrong unit of value. You’re forced to buy time (a month) instead of work (build attempts, regen rounds, a deployable outcome).

Context switches. After exporting to code assistants, you’re still paying for a plan you don’t need.
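The idle-time point is simple arithmetic. The sketch below uses the 5-10 build days mentioned above and assumes a 30-day plan. The monthly price is a hypothetical placeholder, not any platform's actual price.

```python
def idle_share(build_days, plan_days=30):
    """Fraction of the paid period in which nothing is being built."""
    return 1 - build_days / plan_days


def price_per_build_day(monthly_price, build_days):
    """What each active day effectively costs on a monthly plan."""
    return monthly_price / build_days


MONTHLY_PRICE = 30.0  # hypothetical price, chosen only to keep the math readable

for days in (5, 10):
    print(
        f"{days} build days: {idle_share(days):.0%} idle, "
        f"{price_per_build_day(MONTHLY_PRICE, days):.2f} per build day"
    )
```

On these assumptions, a team that builds for 5-10 days pays for a plan that sits idle for roughly two thirds to five sixths of the month.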

✅ What users actually want:

Pay for a build window, not a month - e.g., 3–7 day passes.

Clear units of work (e.g., N regenerations, M contract checks, one deployable build).

No penalty for pausing. Stop, come back later, pick up where you left off.

“Builders don’t think in months. They think in sprints - ‘get this feature working, then pause.’ Pricing should match that: pay for a focused build, not for a calendar page.”

✅ Bottom line: users need predictable, task-aligned pricing - pay for the work you do in short bursts, then pause. Monthly + tokens makes simple projects feel expensive and hard to plan.

5) Compounded Hallucination Errors: Why Small LLM Mistakes Snowball 🌀

Multi-step prompting can amplify small mistakes. LLMs often fail to reliably self-correct without external feedback; in many cases, “self-correction” loops can even degrade the answer quality. In multi-turn settings, errors tend to compound as context shifts and non-determinism introduces fresh inconsistencies.

🧪 Why this happens today:

Self-correction limits. Studies show intrinsic self-correction (model fixes itself from its own output) is unreliable and can worsen reasoning.

Non-determinism between runs. Even “deterministic” settings yield variable outputs across attempts, so a fix in step N may introduce a new discrepancy in step N+1.

Long-context drift. The longer the edit chain, the more latent drift accumulates and the harder it is to detect or reverse it.
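A simplified way to see why long edit chains are fragile: if each step is correct with some probability and steps are treated as independent, the chance that the whole chain is clean shrinks geometrically. Independence is an assumption made here for illustration. It does not come from the studies mentioned above, and real errors are correlated, but the direction of the effect is the same. The 95% per-step figure is hypothetical.

```python
def chain_success(p_step, steps):
    """Probability that every step in the chain is correct, assuming independence."""
    return p_step ** steps


P_STEP = 0.95  # hypothetical per-step reliability

results = {steps: chain_success(P_STEP, steps) for steps in (1, 5, 10, 20)}
for steps, p in results.items():
    print(f"{steps:>2} steps: {p:.1%} chance the chain is still clean")
```

Under this model a step that is right 95% of the time leaves only about a 60% chance of a clean result after 10 edits and about 36% after 20, which is why an external check between steps matters more than a better single step.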

🧵 What it feels like for teams:

Names, shapes, and states keep almost matching - but not quite - creating a patchwork that’s brittle under tests and migrations.

“Re-ask to fix” triggers new side effects elsewhere, so you burn cycles verifying stability instead of shipping.

After a few days, you’re debugging the drift itself, not the original feature.

“One small wrong turn can cascade. If your only tool is ‘ask again,’ you can end up chasing the model’s last mistake instead of fixing the real one.”

✅ Bottom line: compounding hallucination isn’t a rare edge case - it’s a known behavior of multi-turn, self-refining loops. Without an external source of truth (contracts, tests, verification passes), “just prompt again” often spreads the error instead of sealing it.

A special note: “Spec-driven agentic flows” are necessary, but not enough 📐

We’re seeing a new wave of spec-driven, agentic IDEs / “software factories.” Examples include Amazon Kiro, 8090.ai (Chamath’s “Software Factory”), and GitHub’s Spec Kit. The trend is right: add structure - PRDs, specs, tasks - into the loop.

What we agree with:

Spec-driven flows are necessary. They cut chaos, give agents a plan, and reduce obvious mismatches.

Reality check:

They don’t erase hallucinations or non-determinism - especially as apps grow or when you’re editing day after day. Self-correction loops often don’t converge, long contexts drift, and small fixes can still create new inconsistencies. Structure helps; it doesn’t end the problem.

How we do it (and what we’ve learned):

At Prompt.Build, we also run spec-driven agentic flows (PRD → tasks → contracts → code). This reduces mismatch. But at scale, you still see compounded hallucination errors and context drift unless you add guardrails.

“Specs are necessary, not sufficient. They point the model in the right direction; guardrails keep it from drifting when the app gets big and the edits pile up.”

✅ Bottom line:

Spec-driven agents are the right next step - but not enough on their own. At Prompt.Build, we go beyond spec-driven flows with verification and portability guardrails to keep UI/API/schema in lockstep and stop drift before it reaches prod. I’ll break down how we do this in my next post.

Quick FAQ ❓

What is vibe-coding?

Using AI to describe what you want and having the tool build most of the app for you - especially the screens - very quickly.

Is vibe-coding good?

Yes for speed and quick demos and prototyping. It gets tricky when the screens and the backend stop agreeing with each other.

What are the downsides?

Little mismatches pile up (names, data shapes, status codes), fixes can burn a lot of tokens, and you can get tied to one backend provider.

Is vibe-coding free?

No. You pay for tokens. A tiny fix can trigger a big re-generation and cost more than you expect.

Which tool is “best”?

Pick the one that lets you export real code and run it elsewhere later. Portability matters more than the logo.

Will vibe-coding replace programmers?

No. People still decide the data model, security rules, how things are deployed, and what “done” looks like.

What is “contract drift”?

When your UI and API stop matching - one expects customerId, the other sends customer_id; one returns 200, the other expects 204. Things look fine until they break.

Why do tokens spike while debugging?

A small change can make the AI rewrite nearby files and re-send large context in tool calls. Two similar fixes can cost very different amounts.

Why don’t monthly plans fit how people build?

Most teams work in short bursts (a few days), not all month. They want to pay for a build window or a clear chunk of work - not for a calendar month.

What are “compounded hallucination errors”?

A tiny AI mistake leads to another “fix,” which changes something else, and the errors snowball over time.

Are spec-driven agent flows enough?

They help a lot, but not by themselves. You still need checks to keep things aligned.

How do I avoid backend lock-in?

Treat your auth and data like plug-in parts. Don’t scatter provider-specific calls everywhere; keep them in one place so you can move later.

Why I’m writing this (and what’s next) 🚀

We founded Prompt.Build because we love what vibe-coding unlocked - and we kept hitting the same walls after the frontend. In my next post, I’ll share how we go beyond spec-driven agentic flows with a single, machine-checkable contract layer, atomic migrations, and contract-aware tests - so builds stay reproducible, portable, and fast without wasting tokens.

If you’ve hit any of these issues, I’d love to hear your story - leave a comment below or just reply to this email (I read every one). And if you want the follow-up, subscribe so it lands in your inbox. 🔔

— Ivan, co-founder/CEO, Prompt.Build

https://prompt.build